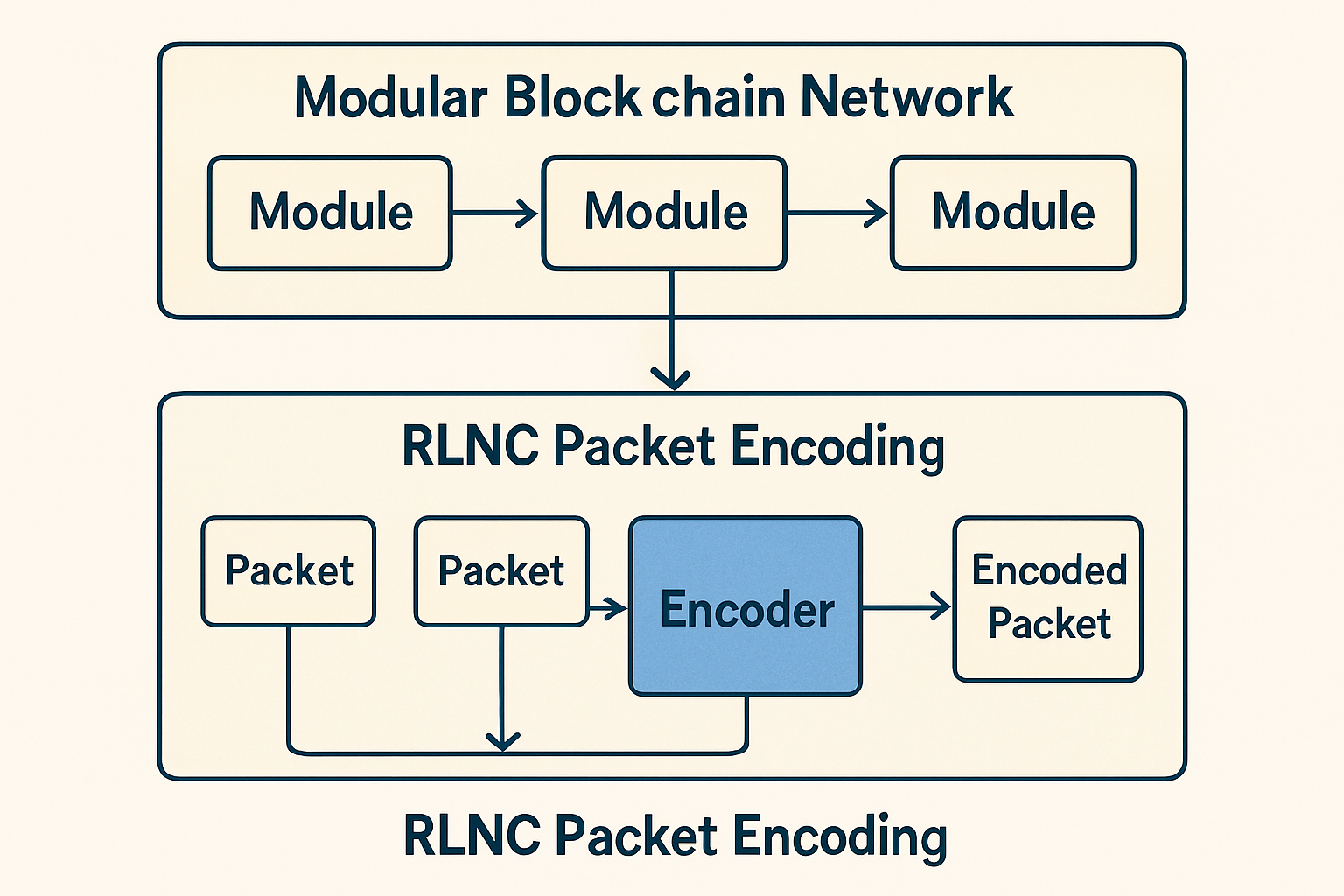

Random Linear Network Coding (RLNC) is emerging as an important building block for the data availability layers of modular blockchains. As decentralized networks scale, keeping data accessible, verifiable, and efficiently transmitted becomes harder. RLNC addresses this by encoding data into random linear combinations of its fragments, so that nodes can reconstruct the original information even if some packets are lost; detecting corrupted packets still requires a separate integrity check such as a hash or commitment. This shift is not just academic: it is being integrated into next-generation blockchain infrastructure, changing how data is distributed and sampled across decentralized systems.

Why Modular Blockchains Need Robust Data Availability

Traditional blockchains require every node to download and store every transaction, resulting in significant resource consumption and bottlenecked scalability. Modular blockchains disrupt this model by decoupling core functions – consensus, execution, settlement, and crucially, data availability – into separate layers that can independently optimize for performance and security. The data availability layer ensures that all transaction data for a block is accessible to anyone who wishes to verify it, without requiring every participant to store the entire dataset.

This separation introduces new challenges: how can lightweight nodes verify that all transaction data exists without downloading everything? Enter RLNC. By encoding blocks using random linear combinations of smaller chunks (packets), RLNC enables nodes to sample just a fraction of the network traffic yet still confidently attest to the complete availability of the underlying data. This approach not only slashes bandwidth requirements but also enhances resilience against network failures or malicious actors attempting to withhold information.
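The sampling argument can be made concrete with a small calculation. The sketch below assumes a deliberately simplified model that is not taken from any specific protocol: each sample is an independent, uniformly random request for one coded packet, and an adversary has to withhold at least some fraction of the packets to prevent reconstruction. The function names and the 50% figure in the usage note are illustrative assumptions.

```python
import math


def undetected_probability(withheld_fraction: float, samples: int) -> float:
    """Chance that all `samples` independent uniform queries succeed
    even though `withheld_fraction` of the coded packets is withheld."""
    if not 0.0 <= withheld_fraction <= 1.0:
        raise ValueError("withheld_fraction must be in [0, 1]")
    if samples < 0:
        raise ValueError("samples must be non-negative")
    return (1.0 - withheld_fraction) ** samples


def samples_needed(withheld_fraction: float, target_miss: float) -> int:
    """Smallest number of samples whose miss probability is <= target_miss."""
    if not 0.0 < withheld_fraction < 1.0:
        raise ValueError("withheld_fraction must be strictly between 0 and 1")
    if not 0.0 < target_miss < 1.0:
        raise ValueError("target_miss must be strictly between 0 and 1")
    return math.ceil(math.log(target_miss) / math.log(1.0 - withheld_fraction))


if __name__ == "__main__":
    # Hypothetical scenario: the adversary must hide half of the packets.
    n = samples_needed(0.5, 1e-9)
    print(n, undetected_probability(0.5, n))
```

Under these assumptions, a light node that makes a few dozen successful samples can push the chance of missing a withholding attack below one in a billion, which is why sampling cost grows with the desired confidence rather than with block size.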

How RLNC Works: Unpacking Random Linear Network Coding

At its core, RLNC makes each transmitted packet a random linear combination of the original message fragments, with coefficients drawn from a finite field and carried alongside the payload. If the message is split into k fragments, any k coded packets whose coefficient vectors are linearly independent suffice to reconstruct the complete dataset, typically via Gaussian elimination. In practice, this has several transformative effects on blockchain networks:

- Interchangeability: Nodes no longer need specific packets; any set of enough linearly independent coded packets will suffice for reconstruction.

- Redundancy with Efficiency: Packet loss or targeted censorship becomes far less effective since missing pieces can be recreated from others.

- Sampling Power: Verifiers can check small samples with high statistical confidence that all underlying data is available.
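The encode and decode steps described above can be sketched in a few lines. This is a minimal illustration, not the implementation used by any of the projects named in this article. It assumes arithmetic over the prime field GF(257) to keep the code short (RLNC implementations commonly use GF(2^8) so that every symbol fits in a byte), and the names `encode`, `decode`, and `demo` are hypothetical.

```python
import random

P = 257  # prime field size; an assumption made to keep the arithmetic simple


def encode(chunks, rng):
    """Build one coded packet: (coefficient vector, payload)."""
    coeffs = [rng.randrange(P) for _ in chunks]
    payload = [
        sum(c * chunk[i] for c, chunk in zip(coeffs, chunks)) % P
        for i in range(len(chunks[0]))
    ]
    return coeffs, payload


def decode(packets, k):
    """Gaussian elimination over GF(P) on rows [coeffs | payload].

    Returns the k original chunks, or None if the received packets
    do not yet contain k linearly independent combinations.
    """
    rows = [list(c) + list(p) for c, p in packets]
    for col in range(k):
        pivot = next((r for r in range(col, len(rows)) if rows[r][col]), None)
        if pivot is None:
            return None  # rank deficient: more packets are needed
        rows[col], rows[pivot] = rows[pivot], rows[col]
        inv = pow(rows[col][col], -1, P)  # modular inverse (Python 3.8+)
        rows[col] = [(x * inv) % P for x in rows[col]]
        for r in range(len(rows)):
            if r != col and rows[r][col]:
                f = rows[r][col]
                rows[r] = [(x - f * y) % P for x, y in zip(rows[r], rows[col])]
    return [rows[i][k:] for i in range(k)]


def demo(loss_rate=0.3, seed=7):
    """Send coded packets over a lossy link until the receiver can decode."""
    rng = random.Random(seed)
    chunks = [list(b"rlnc"), list(b"data"), list(b"avai")]  # equal-length chunks
    received = []
    while True:
        packet = encode(chunks, rng)
        if rng.random() < loss_rate:
            continue  # packet lost in transit; the sender just codes another
        received.append(packet)
        decoded = decode(received, len(chunks))
        if decoded is not None:
            return decoded == chunks


if __name__ == "__main__":
    print(demo())
```

The receiver never asks for a particular packet: it keeps whatever arrives and decodes as soon as the coefficient matrix reaches full rank, which is the interchangeability property from the list above.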

This makes RLNC especially attractive for projects like Celestia and Optimum that are building scalable DA layers for modular blockchain ecosystems. For example, Celestia uses Reed-Solomon erasure coding (a close cousin of RLNC) in its data availability sampling protocol, while Optimum has raised significant capital to develop high-performance memory layers based on RLNC technology.

The Security and Scalability Edge: Why RLNC Matters Now

The move toward modular DA architectures has forced a re-examination of how blockchains preserve security as throughput grows. With RLNC in play, censorship and selective withholding attacks become much harder: because every coded packet contributes equally toward reconstruction, there is no specific packet to target, and adversaries must disrupt a far greater portion of network traffic than before.

This resilience translates directly into practical benefits for developers and users alike:

- Lighter Clients: Light nodes can participate meaningfully in verification by sampling coded packets rather than syncing entire chains.

- Burst Throughput: Networks can safely raise throughput ceilings without sacrificing verifiability or decentralization guarantees.

- Simplified Recovery: Data recovery becomes more robust across unreliable peer-to-peer environments typical in decentralized networks.

The Ethereum research community is actively exploring these advantages as part of its long-term roadmap toward scalable sharding and stateless clients. As protocols like Optimum bring RLNC-based solutions closer to production readiness, we are witnessing a fundamental leap forward in how Web3 handles memory and state distribution at global scale.

Recent advancements in RLNC are also fueling a new wave of innovation around data recovery in decentralized networks. By leveraging the mathematical guarantees of random linear combinations, RLNC-based systems can tolerate higher rates of packet loss and network churn without compromising data integrity. This is particularly valuable for permissionless blockchains, where node participation is fluid and adversarial behavior is always a risk. The ability to reconstruct entire datasets from partial, randomly coded samples means that even if some nodes go offline or attempt sabotage, the network’s data availability remains uncompromised.
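How much redundancy a receiver needs can be estimated directly. The sketch below assumes coefficients are drawn uniformly at random from a field of size q and computes the standard probability that k + extra received packets contain k linearly independent combinations. The function name and the example parameters are illustrative choices, not values from any deployed system.

```python
def full_rank_probability(k: int, q: int, extra: int = 0) -> float:
    """Probability that k + extra uniformly random coefficient vectors
    over GF(q) contain k linearly independent ones (so decoding succeeds).

    Equals the product of (1 - q**-j) for j = extra + 1 .. extra + k.
    """
    if k < 1 or q < 2 or extra < 0:
        raise ValueError("need k >= 1, q >= 2, extra >= 0")
    prob = 1.0
    for j in range(extra + 1, extra + k + 1):
        prob *= 1.0 - float(q) ** (-j)
    return prob


if __name__ == "__main__":
    for q, extra in [(2, 0), (2, 8), (256, 0), (256, 1)]:
        print(q, extra, full_rank_probability(32, q, extra))
```

With a binary field, exactly k packets decode less than a third of the time, while a handful of extra packets or a larger field such as GF(256) brings the success probability very close to one. This is the sense in which randomly coded packets tolerate loss: the receiver only needs slightly more than k arrivals, regardless of which ones they are.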

For developers, this translates into a more reliable foundation for building decentralized applications. Instead of architecting complex fallback mechanisms to address missing or delayed data, teams can rely on RLNC’s inherent redundancy and flexibility. As a result, the operational burden on both infrastructure providers and application developers is significantly reduced, enabling faster iteration cycles and more robust user experiences across modular blockchain ecosystems.

Real-World Deployments: RLNC in Action

The integration of RLNC into production-grade blockchains is no longer hypothetical. Optimum, led by MIT’s Dr. Muriel Médard, exemplifies this trend by developing what it calls Web3’s “missing memory layer.” Optimum applies RLNC to encode blockchain state into interchangeable packets, allowing for rapid retrieval and seamless scaling as demand grows. This approach not only improves throughput but also enhances the trust model for light clients, a critical component as blockchain adoption expands beyond technical users.

Other projects are following suit by experimenting with hybrid protocols that blend RLNC with established consensus mechanisms like PBFT (Practical Byzantine Fault Tolerance), further strengthening resilience against both accidental failures and coordinated attacks. The Ethereum community’s ongoing research into stateless clients and sharding also highlights the growing recognition that advanced coding techniques like RLNC will be central to future scalability gains.

Leading Blockchain Projects Using RLNC & Erasure Coding

- Celestia: Pioneering modular blockchain architecture, Celestia utilizes Reed-Solomon erasure coding for its data availability sampling, enabling light nodes to efficiently verify data without downloading entire blocks. This approach is foundational to Celestia’s scalable DA layer.

- Optimum: Developed by MIT’s Dr. Muriel Médard, Optimum integrates Random Linear Network Coding (RLNC) to create a high-performance memory layer for blockchains, boosting data reliability and scalability across distributed networks.

- Ethereum Research Community: The Ethereum research community actively explores RLNC-based data availability sampling as a next-generation solution for sharded and modular blockchains, aiming to enhance scalability and security for future Ethereum upgrades.

- Polygon Avail: Polygon Avail leverages erasure coding to provide a robust, modular data availability layer for rollups and sidechains, ensuring data can be reconstructed even if parts are missing or withheld.

- EigenLayer: EigenLayer is building restaking infrastructure that incorporates advanced erasure coding techniques for its data availability services, supporting secure and efficient off-chain data verification.

What Comes Next? The Future of Modular DA Layers

The trajectory for RLNC-enabled data availability layers points toward greater modularity, interoperability, and security across decentralized networks. As more blockchains adopt modular architectures, separating settlement, execution, consensus, and DA, RLNC will likely become a standard component in ensuring efficient state propagation and robust sampling guarantees.

Expect ongoing collaboration between academic researchers and industry practitioners to drive further optimization of RLNC schemes tailored for Web3 environments. Areas such as adaptive coding rates, dynamic sampling strategies, and integration with zero-knowledge proofs are especially promising for pushing the boundaries of what’s possible in decentralized data availability.

The promise of Random Linear Network Coding is not just theoretical. It is rapidly materializing as an essential tool in the arsenal of next-generation blockchains striving for unmatched scalability without sacrificing trustlessness or decentralization.

As we look ahead to a landscape where light clients interact seamlessly with massive distributed ledgers, and where bandwidth constraints no longer dictate security tradeoffs, RLNC stands out as a foundational technology powering this shift. For anyone building or researching in the modular blockchain space, understanding how RLNC transforms data availability isn’t optional; it’s mission-critical.